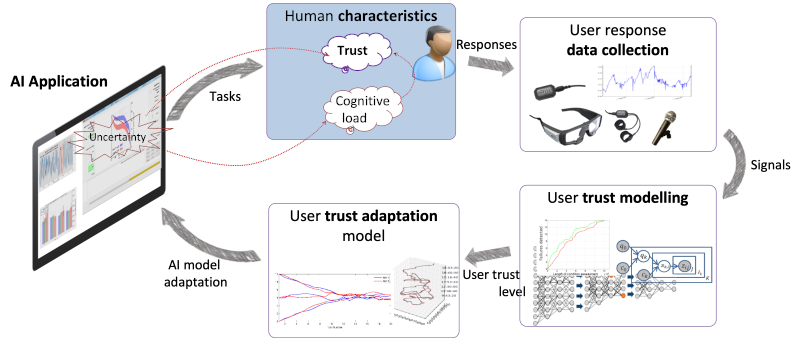

Data analytics-driven solutions are widely used in intelligent systems in which humans and machines make decisions collaboratively based on predictions. Our research aims to explore the effects of AI-related factors on decision making, such as decision quality and user trust in decisions. Furthermore, imprecision and uncertainty are unavoidably associated with AI outputs and, therefore, with the decisions based on them. In human-machine interaction, uncertainty often plays an important role in hindering sense-making and task performance: on the machine side, uncertainty accumulates within the system itself; on the human side, it often results in a “lack of knowledge for trust” or in “over-trust”. Our research investigates how uncertainty in machine learning can be evaluated and how the way it is presented affects human responses in AI-driven decision making.